Autonomous SLAM of an environment Using a RTABMap on Legged Robots

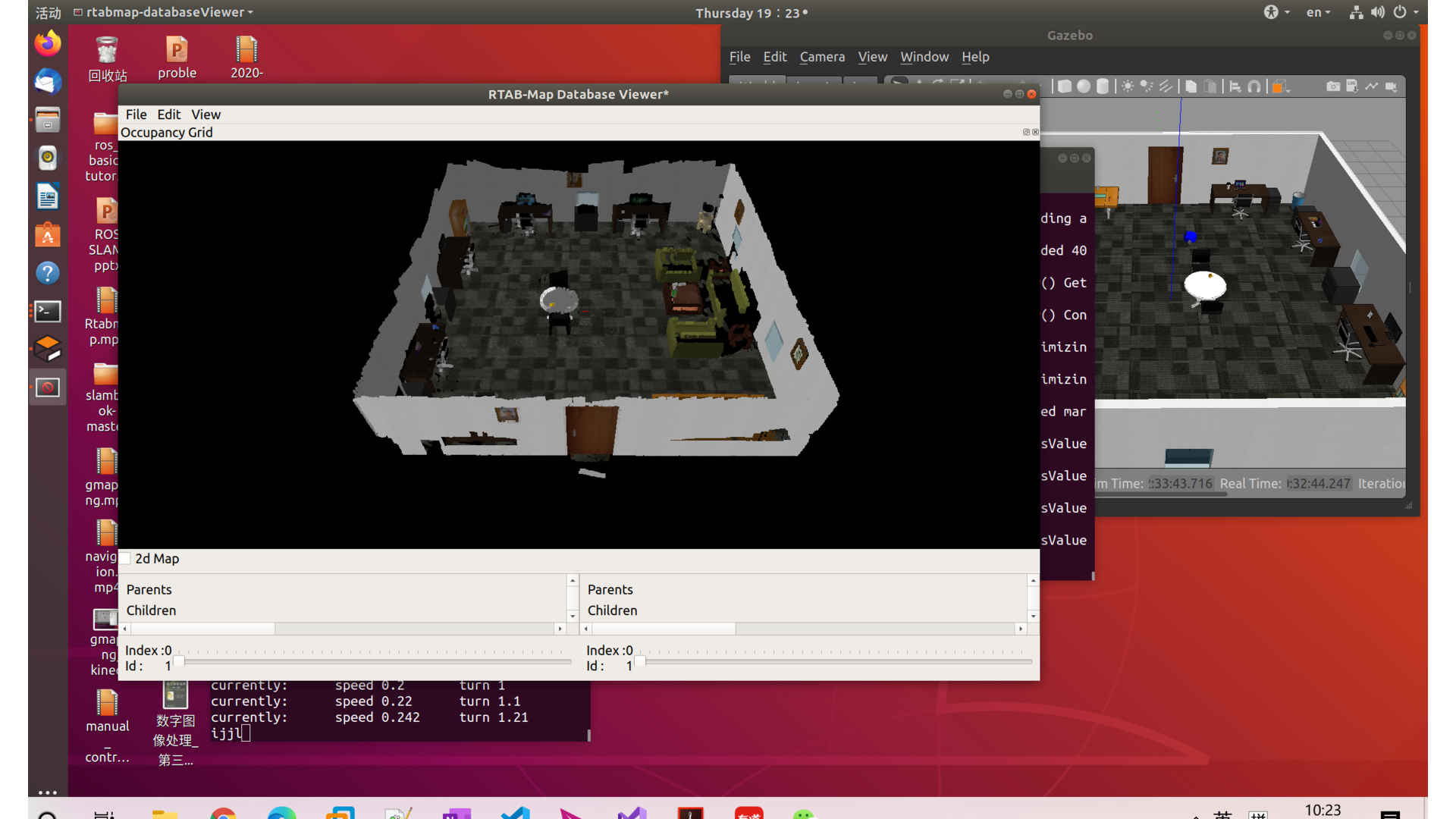

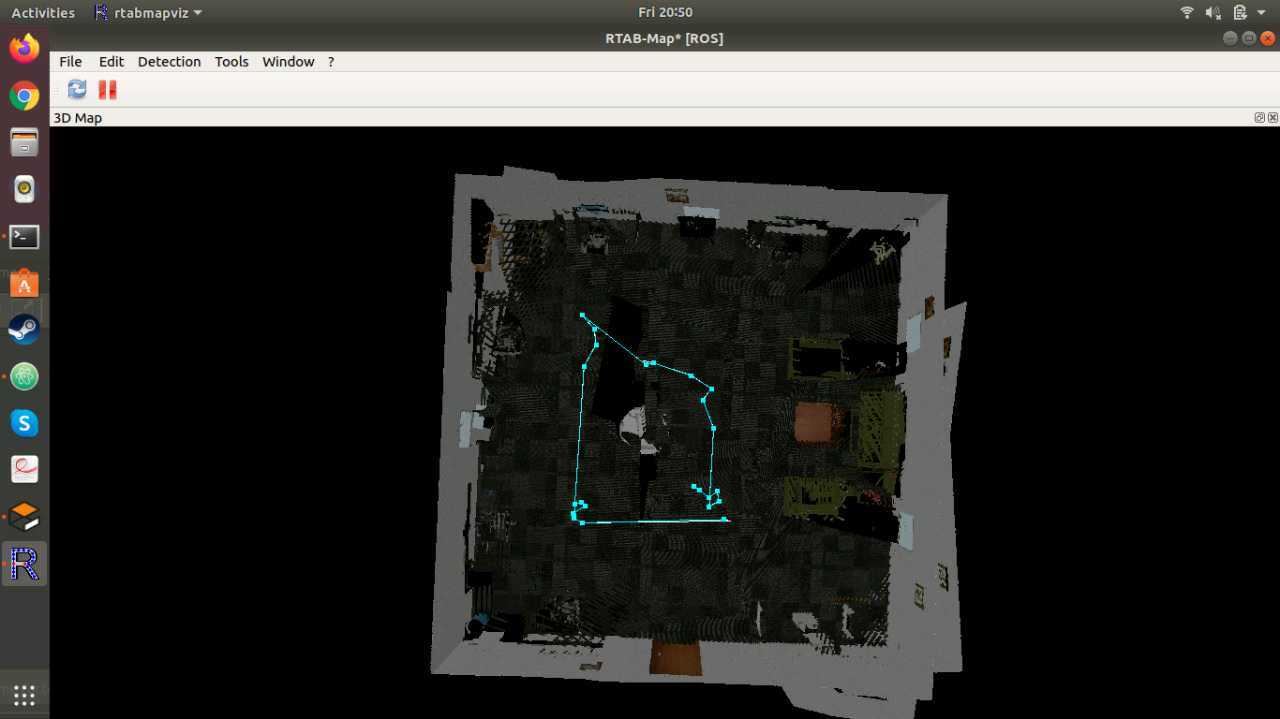

The objective of the work is to create a framework for legged robots to autonomously perform Sinultaneous Localization And Mapping of their environment. We work on the Hebi Daisy hexapod, using Intel Realsense D435 camera for depth estimations to perform SLAM, and the Realsense T265 camera for localization. To begin with, we simulate the environment and framework on Gazebo ROS, and use the Real Time Appearance Based Mapping (RTABMap) Framework. For footfall planning, we create a local height map using the Octomap framework, and use Sobel filters to calculate the slope of the ground. Currently we are looking into using RL based gait generation instead of using Centeral Pattern Generators, and integrating the current footstep planning method with the developed CPG.